When to Implement Data Modeling, Orchestration, and Semantic Layers as Your Business Scales

When to Implement Data Modeling, Orchestration, and Semantic Layers as Your Business Scales

When to Implement Data Modeling, Orchestration, and Semantic Layers as Your Business Scales

News & Insights

News & Insights

News & Insights

Apr 13, 2025

Apr 13, 2025

Apr 13, 2025

As companies scale, their data challenges grow. This article provides a practical guide to implementing data ingestion, modeling, orchestration, and semantic layers at the right time. By aligning data architecture with each growth stage, businesses can avoid common pitfalls and build a scalable, reliable foundation for data-driven decision-making.

Introduction

As organizations grow and their analytics requirements evolve, they inevitably face common pain points in data management—such as inconsistent metrics, frequent pipeline failures, and complex data transformations. Addressing these early with structured implementation of data ingestion, data modeling, orchestration, and semantic layers significantly enhances scalability, reliability, and accuracy.

Data Ingestion is the process of collecting data from various sources and preparing it for storage and analysis.

Data Modeling involves structuring and transforming raw data into a usable, consistent format to support analysis and reporting.

Orchestration manages and automates data workflows, ensuring data tasks run smoothly, reliably, and on schedule.

Semantic Layer provides a centralized, consistent definition of business metrics and terms, ensuring clarity and alignment across the organization.

But when should an organization invest in each component? But when should an organization invest in these components? This article offers clear guidelines tailored to each growth phase, helping you identify when to strategically implement each component.

Deep Dive: Stages of Data Stack Evolution

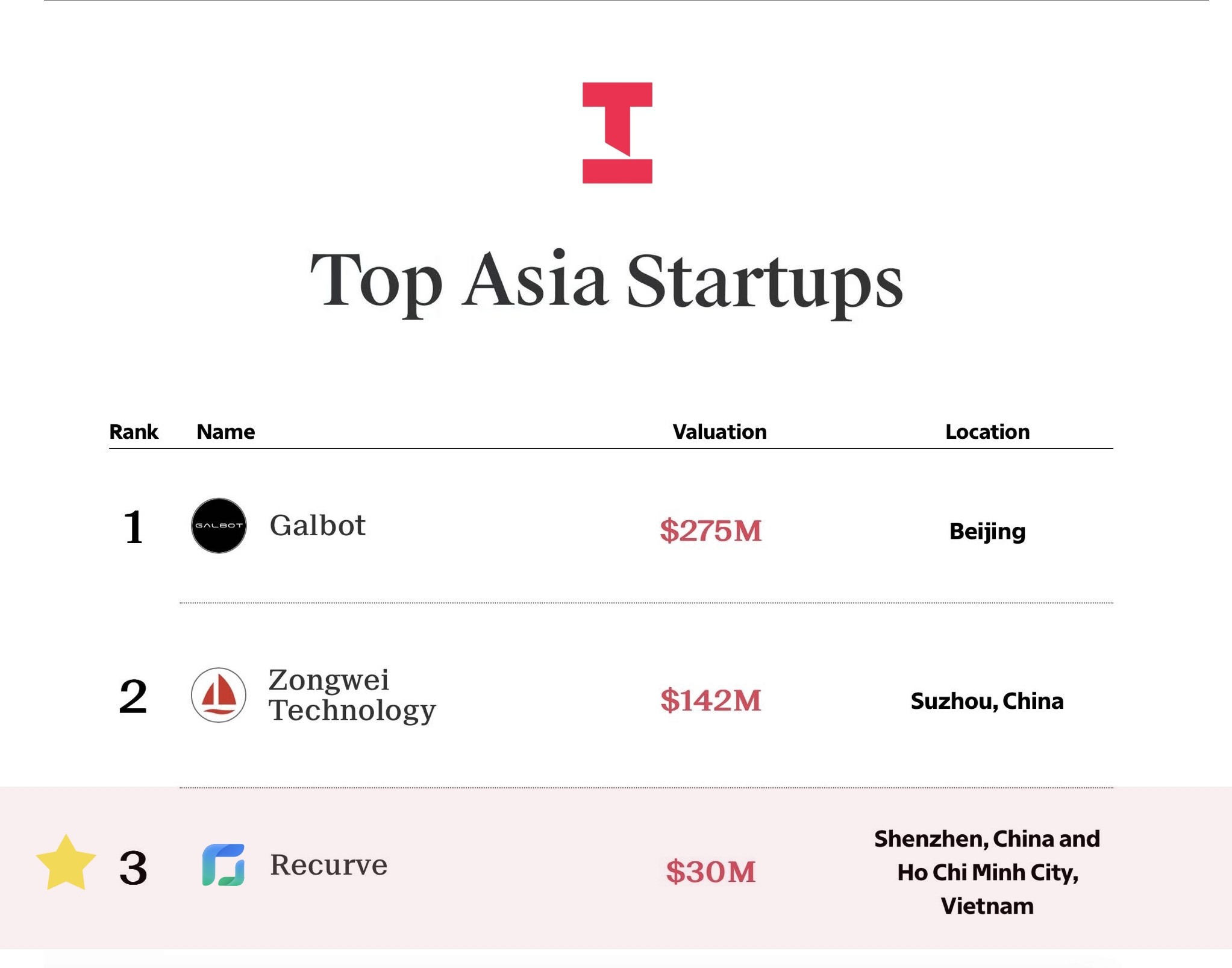

Figure 1: Summary Table: Evolution of the Modern Data Stack

Early stage (Scrappy and Focused)

Business & Team Profile

At the early stage, companies typically have small, lean teams, often founder-led with just a handful of employees. Analytics tasks are generally managed by business generalists or the founding members who balance these responsibilities alongside their core duties. With the primary focus on achieving product-market fit and initial sales, data priorities revolve around essential metrics like daily revenue or website traffic, which are vital for immediate business decisions.

Data Tools & Architecture

During this initial phase, the data infrastructure remains intentionally minimalistic to conserve resources and complexity. Businesses rely heavily on built-in analytics features provided by existing tools and platforms. For instance, an online store may utilize Shopify’s native reports or Google Analytics for basic insights, exporting additional data into spreadsheets as needed. Data usually resides within isolated SaaS applications, with minimal or no formal integration, typically managed through manual CSV exports or simple automation tools.

Data Ingestion: Mostly manual or via simple connectors. Many startups stick to plug-and-play analytics solutions like Mixpanel or Amplitude for product data, which require minimal setup. These tools collect events and provide dashboards without a custom pipeline.

Modeling: Little to no formal data modeling. Any transformations happen in Excel, or via basic SQL queries run directly against production data if needed. The startup may define a few key metrics in a spreadsheet.

Orchestration: None. Data refresh processes are manual or scheduled by the SaaS tools. There are no Airflow or cron jobs – the “orchestration” is a person running a report or exporting data when needed.

Semantic Layer: None. Business definitions of metrics are usually in the heads of the founders or a simple Google Doc. For example, “daily active users” or “gross revenue” might be calculated differently by different people until later – there’s no centralized metric definition yet.

Early Growth Stage (Centralizing and Building Foundations)

Business & Team Profile

In the early growth phase, the company has gained traction – perhaps securing a Series A/B funding and growing customer base. Data-driven decisions become more critical, and the team expands accordingly. Often, this is when the first dedicated data hire arrives (or a tech-savvy analyst steps up). The data team is still small (maybe 1–3 people) and usually centralized, serving the whole company’s needs. For example, a newly hired data analyst or analytics engineer is now responsible for bringing order to the chaos. The business demands more sophisticated analysis (cohort retention, CAC vs. LTV, inventory forecasting), pushing the limits of manual methods.

New Challenges Prompting Change: At this stage, pain points from the startup phase intensify:

Operational Data Not Designed for Analytics: As analytics demand increases, teams quickly realize operational systems store data optimized for transactions, not analysis. Data often requires significant transformation and cleaning, causing delays and inaccuracies.

Data Silos Limit Insights: You can no longer afford separate islands of data. For instance, marketing, sales, and customer support data need to be combined to understand customer lifetime value or omnichannel behavior. The proliferation of SaaS tools leads to fragmented data

Reporting Backlogs: Executives and functional teams are now asking complex questions (“Which marketing channel drives the highest repeat purchases?”). The lone data person is inundated with requests, and answering them with spreadsheets is too slow.

Data Tools & Architecture

Given these growing pains, the company typically invests in a more robust data infrastructure. The modern data stack components start falling into place, focusing on centralization and automation:

Data Ingestion: Rather than manual exports, teams adopt ETL/ELT tools. For example, the company might use a service like Fivetran or Airbyte to automatically pull data from SaaS sources (e.g. Shopify, Google Analytics, Facebook Ads) into a central repository..

Modeling (Transformation Layer): With data centralized, the next step is to clean, join, and organize it for analysis. Early-growth companies introduce a transformation/modeling layer to manage SQL transformations in the warehouse. Data modeling means creating structured tables/views (e.g. a fact table of orders, a dimension table of customers) and business metrics definitions. This is when the business logic moves out of ad-hoc spreadsheets into version-controlled SQL code. Data modeling is typically introduced at this stage because reporting needs are more complex and require consistent, reusable calculations.

Orchestration: With a few data pipelines now in play (ingesting from multiple sources, running data models on a schedule), there’s a growing need to coordinate and automate workflows. In early growth, the data team might use simple scheduling – e.g. relying on the ETL tool’s scheduler or even cron jobs. Orchestration becomes important when you need dependencies and monitoring – for example, ensuring that “daily data refresh” runs reliably at 7 AM. Without an orchestration tool, the team lacks visibility into pipeline failures or delays. Early on, you might manage with cron or manual runs, but once you have >1–2 pipelines, it’s wise to plan for orchestration.

Semantic Layer: Many early-growth companies still rely on the BI tool or data models for metric definitions. A dedicated semantic layer (a centralized metrics store or business glossary) typically comes a bit later when multiple teams/tools are consuming data.

Team Evolution

The early growth data team is usually one or two generalists evolving into defined roles. You’ll see the beginning of specialization:

A data engineer (or the closest thing to one) starts focusing on infrastructure – setting up the warehouse, building pipelines, ensuring data flows correctly. Often this is still the same person as the analyst, just wearing multiple hats.

A data analyst concentrates on reporting and analysis – building dashboards, doing deep-dive analyses for business questions. If the first hire was an analyst, the next might be a more engineering-oriented person to handle the back-end data prep.

These folks still sit together as a central data team, serving all departments. The centralization ensures consistent practices; the trade-off is they can become a bottleneck as demand increases. But in early growth, centralization is efficient given limited headcount.

Scaling Stage (Advanced Stack for a Scaling Business)

Business & Team Profile

In the scaling stage, the company is now a mid-sized business on a fast growth trajectory. Data has firmly become a strategic asset: leadership expects data-driven insights for optimizing marketing spend, personalization, supply chain, product development – virtually every function. To meet these needs, the data team grows significantly and diversifies in skills. The data team may still be centralized under a Head of Data/Analytics, but they collaborate with every department and might start embedding analysts in business units to keep up with domain-specific requests.

Data Tools & Architecture

At this stage, the modern data stack “fills out” with more sophisticated technology, addressing challenges of greater volume, velocity, and variety of data:

Data Ingestion and Integration: The number of data sources explodes – not just SaaS apps, but also internal microservices and third-party data feeds. Batch ETL continues, but there’s also a push for real-time or streaming data ingestion.

Modeling (Transformation) Enhancements: By this stage, data modeling is an established practice, and the transformation layer becomes more complex to maintain. The organization likely has hundreds or thousands of data models or other ETL scripts. The modeling efforts focus on creating stable, reusable data sets for analytics (sometimes called “core” models or Kimball-style star schemas for key subject areas like orders, inventory, customers). The scaling company might invest in modular and well-documented data models, enforcing company-wide conventions for things like naming, grain of data, and calculations. For example, how to compute “customer lifetime value” is defined in the transformation code and reused, rather than each analyst writing their own SQL.

Orchestration: If an orchestrator like Airflow wasn’t introduced in early growth, it almost certainly is in the scaling phase. The number of pipelines (jobs) to manage is significant: data ingestion jobs for dozens of sources, transformation jobs, ML model training jobs, and syncs back to systems. A centralized orchestration tool is critical for reliability and visibility. Airflow is a popular choice at this stage (often self-hosted or using a managed service like Astronomer), given its maturity and plugin ecosystem.

Semantic Layer and Self-Service Analytics: As the company grows, consistent metrics definitions and enabling business users to self-serve become high priorities. This is typically when a robust semantic layer is introduced (if not already in place via a BI tool). A semantic layer is essentially a central catalog of business metrics and dimensions that all tools draw from, ensuring that “Revenue” or “Active Customers” mean the same thing everywhere. These can sit between the warehouse and various BI tools, providing a centralized metric definition. The goal is to avoid a scenario where Finance uses one calculation in Excel, Marketing another in Tableau – instead, everyone queries the semantic layer so definitions don’t drift.

Team Evolution

During the scaling phase, the data team structure often shifts from a purely centralized model to a hybrid model. The central data team still maintains the core infrastructure (the warehouse, pipelines, governance), but embedded analysts or domain-specific data teams start to appear in various departments (marketing, finance, product, etc.). This addresses the earlier bottleneck problem: now each department might have its own analyst or analytics team member who knows their data and can self-serve for many needs, while the central team ensures they have clean data and robust tools to do so.

Mature Enterprise Stage (Robust, Governed, and Real-Time)

Business & Team Profile

In the mature enterprise stage, the company is a large, established player – perhaps a public company or a dominant brand in its sector. The scale of data is enormous. By now, data is deeply embedded in every facet of the business, and there’s likely a Chief Data Officer or Chief Analytics Officer ensuring data strategy aligns with corporate strategy.

Data Tools & Architecture

The data team in an enterprise is large and often decentralized. You might have a central data platform group maintaining core infrastructure and governance, and semi-autonomous data teams aligned to business domains (marketing analytics team, merchandising analytics team, etc.) – akin to a data mesh organizational approach where each domain handles their data as a product. At this stage, the modern data stack is fully evolved and likely incorporates cutting-edge practices:

Data Architecture: The architecture likely spans multiple systems and possibly multiple cloud environments. Many enterprises adopt a data mesh or distributed architecture approach: different business units manage their own pipelines and datasets (with their own data engineers/analysts), but under a central governance framework to ensure interoperability. Data is treated as a product. The central team ensures standards and tooling: they provide the data platform as a self-service platform for the domain teams. They also enforce data governance policies across all teams (security, privacy, compliance).

Ingestion & Processing: The enterprise likely has a mix of streaming, batch, and micro-batch processing all coexisting.

Modeling & Semantic Mastery: By the time a company is a mature enterprise, its data modeling layers are highly sophisticated. The enterprise will have:

Master Data Management (MDM): Possibly separate systems to maintain master records. MDM solutions ensure that across all data products, certain key entities (customer, product, store) are consistently identified and attributed.

Semantic Consistency: A fully fleshed-out semantic layer or business glossary that is adopted company-wide. This could be an extension of the BI tool semantics or a standalone semantic layer that multiple BI tools feed from.

Complex Data Models: The transformations may now include complex business logic that has been accumulated over years. It’s crucial that these are well-documented and maintained. The enterprise may use a combination of SQL, Python, and other tools for transformations.

The data team might introduce domain-oriented data modeling – where each business domain has its own schema and models, which then feed into enterprise-wide models. This aligns with the data mesh concept of domains owning their data transformations too.

Team Evolution

The team in a mature enterprise is massive and structured. Building on the hybrid model from scaling, it might now resemble a federated model or data mesh organization:

Each domain (Marketing, Supply Chain, Ecommerce, Stores, etc.) has a data/analytics team embedded that includes data engineers, analysts, perhaps data scientists, and they produce domain-specific data products. They report into their function but also have a dotted line to central data governance.

A Central Data Platform & Governance team provides the underlying platforms and supports domain teams in using them. They also handle enterprise-wide concerns like governance, privacy, and cross-domain data integration.

There are likely leadership roles such as Data Product Owners for each major data product or domain, responsible for maximizing value from that domain’s data. And a Data Architect or Architecture board that ensures all these data products can interoperate and fit into the big picture

Summary

In summary, the modern data stack in any company matures from simple and scrappy to sophisticated and strategic. By approaching this evolution in milestone stages, you can align your data infrastructure with your company’s growth, ensuring that at each step, data serves the business effectively. By following the progression outlined above, a company can navigate the modern data stack journey with confidence and pragmatism, laying the groundwork for sustained, data-driven success.

Introduction

As organizations grow and their analytics requirements evolve, they inevitably face common pain points in data management—such as inconsistent metrics, frequent pipeline failures, and complex data transformations. Addressing these early with structured implementation of data ingestion, data modeling, orchestration, and semantic layers significantly enhances scalability, reliability, and accuracy.

Data Ingestion is the process of collecting data from various sources and preparing it for storage and analysis.

Data Modeling involves structuring and transforming raw data into a usable, consistent format to support analysis and reporting.

Orchestration manages and automates data workflows, ensuring data tasks run smoothly, reliably, and on schedule.

Semantic Layer provides a centralized, consistent definition of business metrics and terms, ensuring clarity and alignment across the organization.

But when should an organization invest in each component? But when should an organization invest in these components? This article offers clear guidelines tailored to each growth phase, helping you identify when to strategically implement each component.

Deep Dive: Stages of Data Stack Evolution

Figure 1: Summary Table: Evolution of the Modern Data Stack

Early stage (Scrappy and Focused)

Business & Team Profile

At the early stage, companies typically have small, lean teams, often founder-led with just a handful of employees. Analytics tasks are generally managed by business generalists or the founding members who balance these responsibilities alongside their core duties. With the primary focus on achieving product-market fit and initial sales, data priorities revolve around essential metrics like daily revenue or website traffic, which are vital for immediate business decisions.

Data Tools & Architecture

During this initial phase, the data infrastructure remains intentionally minimalistic to conserve resources and complexity. Businesses rely heavily on built-in analytics features provided by existing tools and platforms. For instance, an online store may utilize Shopify’s native reports or Google Analytics for basic insights, exporting additional data into spreadsheets as needed. Data usually resides within isolated SaaS applications, with minimal or no formal integration, typically managed through manual CSV exports or simple automation tools.

Data Ingestion: Mostly manual or via simple connectors. Many startups stick to plug-and-play analytics solutions like Mixpanel or Amplitude for product data, which require minimal setup. These tools collect events and provide dashboards without a custom pipeline.

Modeling: Little to no formal data modeling. Any transformations happen in Excel, or via basic SQL queries run directly against production data if needed. The startup may define a few key metrics in a spreadsheet.

Orchestration: None. Data refresh processes are manual or scheduled by the SaaS tools. There are no Airflow or cron jobs – the “orchestration” is a person running a report or exporting data when needed.

Semantic Layer: None. Business definitions of metrics are usually in the heads of the founders or a simple Google Doc. For example, “daily active users” or “gross revenue” might be calculated differently by different people until later – there’s no centralized metric definition yet.

Early Growth Stage (Centralizing and Building Foundations)

Business & Team Profile

In the early growth phase, the company has gained traction – perhaps securing a Series A/B funding and growing customer base. Data-driven decisions become more critical, and the team expands accordingly. Often, this is when the first dedicated data hire arrives (or a tech-savvy analyst steps up). The data team is still small (maybe 1–3 people) and usually centralized, serving the whole company’s needs. For example, a newly hired data analyst or analytics engineer is now responsible for bringing order to the chaos. The business demands more sophisticated analysis (cohort retention, CAC vs. LTV, inventory forecasting), pushing the limits of manual methods.

New Challenges Prompting Change: At this stage, pain points from the startup phase intensify:

Operational Data Not Designed for Analytics: As analytics demand increases, teams quickly realize operational systems store data optimized for transactions, not analysis. Data often requires significant transformation and cleaning, causing delays and inaccuracies.

Data Silos Limit Insights: You can no longer afford separate islands of data. For instance, marketing, sales, and customer support data need to be combined to understand customer lifetime value or omnichannel behavior. The proliferation of SaaS tools leads to fragmented data

Reporting Backlogs: Executives and functional teams are now asking complex questions (“Which marketing channel drives the highest repeat purchases?”). The lone data person is inundated with requests, and answering them with spreadsheets is too slow.

Data Tools & Architecture

Given these growing pains, the company typically invests in a more robust data infrastructure. The modern data stack components start falling into place, focusing on centralization and automation:

Data Ingestion: Rather than manual exports, teams adopt ETL/ELT tools. For example, the company might use a service like Fivetran or Airbyte to automatically pull data from SaaS sources (e.g. Shopify, Google Analytics, Facebook Ads) into a central repository..

Modeling (Transformation Layer): With data centralized, the next step is to clean, join, and organize it for analysis. Early-growth companies introduce a transformation/modeling layer to manage SQL transformations in the warehouse. Data modeling means creating structured tables/views (e.g. a fact table of orders, a dimension table of customers) and business metrics definitions. This is when the business logic moves out of ad-hoc spreadsheets into version-controlled SQL code. Data modeling is typically introduced at this stage because reporting needs are more complex and require consistent, reusable calculations.

Orchestration: With a few data pipelines now in play (ingesting from multiple sources, running data models on a schedule), there’s a growing need to coordinate and automate workflows. In early growth, the data team might use simple scheduling – e.g. relying on the ETL tool’s scheduler or even cron jobs. Orchestration becomes important when you need dependencies and monitoring – for example, ensuring that “daily data refresh” runs reliably at 7 AM. Without an orchestration tool, the team lacks visibility into pipeline failures or delays. Early on, you might manage with cron or manual runs, but once you have >1–2 pipelines, it’s wise to plan for orchestration.

Semantic Layer: Many early-growth companies still rely on the BI tool or data models for metric definitions. A dedicated semantic layer (a centralized metrics store or business glossary) typically comes a bit later when multiple teams/tools are consuming data.

Team Evolution

The early growth data team is usually one or two generalists evolving into defined roles. You’ll see the beginning of specialization:

A data engineer (or the closest thing to one) starts focusing on infrastructure – setting up the warehouse, building pipelines, ensuring data flows correctly. Often this is still the same person as the analyst, just wearing multiple hats.

A data analyst concentrates on reporting and analysis – building dashboards, doing deep-dive analyses for business questions. If the first hire was an analyst, the next might be a more engineering-oriented person to handle the back-end data prep.

These folks still sit together as a central data team, serving all departments. The centralization ensures consistent practices; the trade-off is they can become a bottleneck as demand increases. But in early growth, centralization is efficient given limited headcount.

Scaling Stage (Advanced Stack for a Scaling Business)

Business & Team Profile

In the scaling stage, the company is now a mid-sized business on a fast growth trajectory. Data has firmly become a strategic asset: leadership expects data-driven insights for optimizing marketing spend, personalization, supply chain, product development – virtually every function. To meet these needs, the data team grows significantly and diversifies in skills. The data team may still be centralized under a Head of Data/Analytics, but they collaborate with every department and might start embedding analysts in business units to keep up with domain-specific requests.

Data Tools & Architecture

At this stage, the modern data stack “fills out” with more sophisticated technology, addressing challenges of greater volume, velocity, and variety of data:

Data Ingestion and Integration: The number of data sources explodes – not just SaaS apps, but also internal microservices and third-party data feeds. Batch ETL continues, but there’s also a push for real-time or streaming data ingestion.

Modeling (Transformation) Enhancements: By this stage, data modeling is an established practice, and the transformation layer becomes more complex to maintain. The organization likely has hundreds or thousands of data models or other ETL scripts. The modeling efforts focus on creating stable, reusable data sets for analytics (sometimes called “core” models or Kimball-style star schemas for key subject areas like orders, inventory, customers). The scaling company might invest in modular and well-documented data models, enforcing company-wide conventions for things like naming, grain of data, and calculations. For example, how to compute “customer lifetime value” is defined in the transformation code and reused, rather than each analyst writing their own SQL.

Orchestration: If an orchestrator like Airflow wasn’t introduced in early growth, it almost certainly is in the scaling phase. The number of pipelines (jobs) to manage is significant: data ingestion jobs for dozens of sources, transformation jobs, ML model training jobs, and syncs back to systems. A centralized orchestration tool is critical for reliability and visibility. Airflow is a popular choice at this stage (often self-hosted or using a managed service like Astronomer), given its maturity and plugin ecosystem.

Semantic Layer and Self-Service Analytics: As the company grows, consistent metrics definitions and enabling business users to self-serve become high priorities. This is typically when a robust semantic layer is introduced (if not already in place via a BI tool). A semantic layer is essentially a central catalog of business metrics and dimensions that all tools draw from, ensuring that “Revenue” or “Active Customers” mean the same thing everywhere. These can sit between the warehouse and various BI tools, providing a centralized metric definition. The goal is to avoid a scenario where Finance uses one calculation in Excel, Marketing another in Tableau – instead, everyone queries the semantic layer so definitions don’t drift.

Team Evolution

During the scaling phase, the data team structure often shifts from a purely centralized model to a hybrid model. The central data team still maintains the core infrastructure (the warehouse, pipelines, governance), but embedded analysts or domain-specific data teams start to appear in various departments (marketing, finance, product, etc.). This addresses the earlier bottleneck problem: now each department might have its own analyst or analytics team member who knows their data and can self-serve for many needs, while the central team ensures they have clean data and robust tools to do so.

Mature Enterprise Stage (Robust, Governed, and Real-Time)

Business & Team Profile

In the mature enterprise stage, the company is a large, established player – perhaps a public company or a dominant brand in its sector. The scale of data is enormous. By now, data is deeply embedded in every facet of the business, and there’s likely a Chief Data Officer or Chief Analytics Officer ensuring data strategy aligns with corporate strategy.

Data Tools & Architecture

The data team in an enterprise is large and often decentralized. You might have a central data platform group maintaining core infrastructure and governance, and semi-autonomous data teams aligned to business domains (marketing analytics team, merchandising analytics team, etc.) – akin to a data mesh organizational approach where each domain handles their data as a product. At this stage, the modern data stack is fully evolved and likely incorporates cutting-edge practices:

Data Architecture: The architecture likely spans multiple systems and possibly multiple cloud environments. Many enterprises adopt a data mesh or distributed architecture approach: different business units manage their own pipelines and datasets (with their own data engineers/analysts), but under a central governance framework to ensure interoperability. Data is treated as a product. The central team ensures standards and tooling: they provide the data platform as a self-service platform for the domain teams. They also enforce data governance policies across all teams (security, privacy, compliance).

Ingestion & Processing: The enterprise likely has a mix of streaming, batch, and micro-batch processing all coexisting.

Modeling & Semantic Mastery: By the time a company is a mature enterprise, its data modeling layers are highly sophisticated. The enterprise will have:

Master Data Management (MDM): Possibly separate systems to maintain master records. MDM solutions ensure that across all data products, certain key entities (customer, product, store) are consistently identified and attributed.

Semantic Consistency: A fully fleshed-out semantic layer or business glossary that is adopted company-wide. This could be an extension of the BI tool semantics or a standalone semantic layer that multiple BI tools feed from.

Complex Data Models: The transformations may now include complex business logic that has been accumulated over years. It’s crucial that these are well-documented and maintained. The enterprise may use a combination of SQL, Python, and other tools for transformations.

The data team might introduce domain-oriented data modeling – where each business domain has its own schema and models, which then feed into enterprise-wide models. This aligns with the data mesh concept of domains owning their data transformations too.

Team Evolution

The team in a mature enterprise is massive and structured. Building on the hybrid model from scaling, it might now resemble a federated model or data mesh organization:

Each domain (Marketing, Supply Chain, Ecommerce, Stores, etc.) has a data/analytics team embedded that includes data engineers, analysts, perhaps data scientists, and they produce domain-specific data products. They report into their function but also have a dotted line to central data governance.

A Central Data Platform & Governance team provides the underlying platforms and supports domain teams in using them. They also handle enterprise-wide concerns like governance, privacy, and cross-domain data integration.

There are likely leadership roles such as Data Product Owners for each major data product or domain, responsible for maximizing value from that domain’s data. And a Data Architect or Architecture board that ensures all these data products can interoperate and fit into the big picture

Summary

In summary, the modern data stack in any company matures from simple and scrappy to sophisticated and strategic. By approaching this evolution in milestone stages, you can align your data infrastructure with your company’s growth, ensuring that at each step, data serves the business effectively. By following the progression outlined above, a company can navigate the modern data stack journey with confidence and pragmatism, laying the groundwork for sustained, data-driven success.

Introduction

As organizations grow and their analytics requirements evolve, they inevitably face common pain points in data management—such as inconsistent metrics, frequent pipeline failures, and complex data transformations. Addressing these early with structured implementation of data ingestion, data modeling, orchestration, and semantic layers significantly enhances scalability, reliability, and accuracy.

Data Ingestion is the process of collecting data from various sources and preparing it for storage and analysis.

Data Modeling involves structuring and transforming raw data into a usable, consistent format to support analysis and reporting.

Orchestration manages and automates data workflows, ensuring data tasks run smoothly, reliably, and on schedule.

Semantic Layer provides a centralized, consistent definition of business metrics and terms, ensuring clarity and alignment across the organization.

But when should an organization invest in each component? But when should an organization invest in these components? This article offers clear guidelines tailored to each growth phase, helping you identify when to strategically implement each component.

Deep Dive: Stages of Data Stack Evolution

Figure 1: Summary Table: Evolution of the Modern Data Stack

Early stage (Scrappy and Focused)

Business & Team Profile

At the early stage, companies typically have small, lean teams, often founder-led with just a handful of employees. Analytics tasks are generally managed by business generalists or the founding members who balance these responsibilities alongside their core duties. With the primary focus on achieving product-market fit and initial sales, data priorities revolve around essential metrics like daily revenue or website traffic, which are vital for immediate business decisions.

Data Tools & Architecture

During this initial phase, the data infrastructure remains intentionally minimalistic to conserve resources and complexity. Businesses rely heavily on built-in analytics features provided by existing tools and platforms. For instance, an online store may utilize Shopify’s native reports or Google Analytics for basic insights, exporting additional data into spreadsheets as needed. Data usually resides within isolated SaaS applications, with minimal or no formal integration, typically managed through manual CSV exports or simple automation tools.

Data Ingestion: Mostly manual or via simple connectors. Many startups stick to plug-and-play analytics solutions like Mixpanel or Amplitude for product data, which require minimal setup. These tools collect events and provide dashboards without a custom pipeline.

Modeling: Little to no formal data modeling. Any transformations happen in Excel, or via basic SQL queries run directly against production data if needed. The startup may define a few key metrics in a spreadsheet.

Orchestration: None. Data refresh processes are manual or scheduled by the SaaS tools. There are no Airflow or cron jobs – the “orchestration” is a person running a report or exporting data when needed.

Semantic Layer: None. Business definitions of metrics are usually in the heads of the founders or a simple Google Doc. For example, “daily active users” or “gross revenue” might be calculated differently by different people until later – there’s no centralized metric definition yet.

Early Growth Stage (Centralizing and Building Foundations)

Business & Team Profile

In the early growth phase, the company has gained traction – perhaps securing a Series A/B funding and growing customer base. Data-driven decisions become more critical, and the team expands accordingly. Often, this is when the first dedicated data hire arrives (or a tech-savvy analyst steps up). The data team is still small (maybe 1–3 people) and usually centralized, serving the whole company’s needs. For example, a newly hired data analyst or analytics engineer is now responsible for bringing order to the chaos. The business demands more sophisticated analysis (cohort retention, CAC vs. LTV, inventory forecasting), pushing the limits of manual methods.

New Challenges Prompting Change: At this stage, pain points from the startup phase intensify:

Operational Data Not Designed for Analytics: As analytics demand increases, teams quickly realize operational systems store data optimized for transactions, not analysis. Data often requires significant transformation and cleaning, causing delays and inaccuracies.

Data Silos Limit Insights: You can no longer afford separate islands of data. For instance, marketing, sales, and customer support data need to be combined to understand customer lifetime value or omnichannel behavior. The proliferation of SaaS tools leads to fragmented data

Reporting Backlogs: Executives and functional teams are now asking complex questions (“Which marketing channel drives the highest repeat purchases?”). The lone data person is inundated with requests, and answering them with spreadsheets is too slow.

Data Tools & Architecture

Given these growing pains, the company typically invests in a more robust data infrastructure. The modern data stack components start falling into place, focusing on centralization and automation:

Data Ingestion: Rather than manual exports, teams adopt ETL/ELT tools. For example, the company might use a service like Fivetran or Airbyte to automatically pull data from SaaS sources (e.g. Shopify, Google Analytics, Facebook Ads) into a central repository..

Modeling (Transformation Layer): With data centralized, the next step is to clean, join, and organize it for analysis. Early-growth companies introduce a transformation/modeling layer to manage SQL transformations in the warehouse. Data modeling means creating structured tables/views (e.g. a fact table of orders, a dimension table of customers) and business metrics definitions. This is when the business logic moves out of ad-hoc spreadsheets into version-controlled SQL code. Data modeling is typically introduced at this stage because reporting needs are more complex and require consistent, reusable calculations.

Orchestration: With a few data pipelines now in play (ingesting from multiple sources, running data models on a schedule), there’s a growing need to coordinate and automate workflows. In early growth, the data team might use simple scheduling – e.g. relying on the ETL tool’s scheduler or even cron jobs. Orchestration becomes important when you need dependencies and monitoring – for example, ensuring that “daily data refresh” runs reliably at 7 AM. Without an orchestration tool, the team lacks visibility into pipeline failures or delays. Early on, you might manage with cron or manual runs, but once you have >1–2 pipelines, it’s wise to plan for orchestration.

Semantic Layer: Many early-growth companies still rely on the BI tool or data models for metric definitions. A dedicated semantic layer (a centralized metrics store or business glossary) typically comes a bit later when multiple teams/tools are consuming data.

Team Evolution

The early growth data team is usually one or two generalists evolving into defined roles. You’ll see the beginning of specialization:

A data engineer (or the closest thing to one) starts focusing on infrastructure – setting up the warehouse, building pipelines, ensuring data flows correctly. Often this is still the same person as the analyst, just wearing multiple hats.

A data analyst concentrates on reporting and analysis – building dashboards, doing deep-dive analyses for business questions. If the first hire was an analyst, the next might be a more engineering-oriented person to handle the back-end data prep.

These folks still sit together as a central data team, serving all departments. The centralization ensures consistent practices; the trade-off is they can become a bottleneck as demand increases. But in early growth, centralization is efficient given limited headcount.

Scaling Stage (Advanced Stack for a Scaling Business)

Business & Team Profile

In the scaling stage, the company is now a mid-sized business on a fast growth trajectory. Data has firmly become a strategic asset: leadership expects data-driven insights for optimizing marketing spend, personalization, supply chain, product development – virtually every function. To meet these needs, the data team grows significantly and diversifies in skills. The data team may still be centralized under a Head of Data/Analytics, but they collaborate with every department and might start embedding analysts in business units to keep up with domain-specific requests.

Data Tools & Architecture

At this stage, the modern data stack “fills out” with more sophisticated technology, addressing challenges of greater volume, velocity, and variety of data:

Data Ingestion and Integration: The number of data sources explodes – not just SaaS apps, but also internal microservices and third-party data feeds. Batch ETL continues, but there’s also a push for real-time or streaming data ingestion.

Modeling (Transformation) Enhancements: By this stage, data modeling is an established practice, and the transformation layer becomes more complex to maintain. The organization likely has hundreds or thousands of data models or other ETL scripts. The modeling efforts focus on creating stable, reusable data sets for analytics (sometimes called “core” models or Kimball-style star schemas for key subject areas like orders, inventory, customers). The scaling company might invest in modular and well-documented data models, enforcing company-wide conventions for things like naming, grain of data, and calculations. For example, how to compute “customer lifetime value” is defined in the transformation code and reused, rather than each analyst writing their own SQL.

Orchestration: If an orchestrator like Airflow wasn’t introduced in early growth, it almost certainly is in the scaling phase. The number of pipelines (jobs) to manage is significant: data ingestion jobs for dozens of sources, transformation jobs, ML model training jobs, and syncs back to systems. A centralized orchestration tool is critical for reliability and visibility. Airflow is a popular choice at this stage (often self-hosted or using a managed service like Astronomer), given its maturity and plugin ecosystem.

Semantic Layer and Self-Service Analytics: As the company grows, consistent metrics definitions and enabling business users to self-serve become high priorities. This is typically when a robust semantic layer is introduced (if not already in place via a BI tool). A semantic layer is essentially a central catalog of business metrics and dimensions that all tools draw from, ensuring that “Revenue” or “Active Customers” mean the same thing everywhere. These can sit between the warehouse and various BI tools, providing a centralized metric definition. The goal is to avoid a scenario where Finance uses one calculation in Excel, Marketing another in Tableau – instead, everyone queries the semantic layer so definitions don’t drift.

Team Evolution

During the scaling phase, the data team structure often shifts from a purely centralized model to a hybrid model. The central data team still maintains the core infrastructure (the warehouse, pipelines, governance), but embedded analysts or domain-specific data teams start to appear in various departments (marketing, finance, product, etc.). This addresses the earlier bottleneck problem: now each department might have its own analyst or analytics team member who knows their data and can self-serve for many needs, while the central team ensures they have clean data and robust tools to do so.

Mature Enterprise Stage (Robust, Governed, and Real-Time)

Business & Team Profile

In the mature enterprise stage, the company is a large, established player – perhaps a public company or a dominant brand in its sector. The scale of data is enormous. By now, data is deeply embedded in every facet of the business, and there’s likely a Chief Data Officer or Chief Analytics Officer ensuring data strategy aligns with corporate strategy.

Data Tools & Architecture

The data team in an enterprise is large and often decentralized. You might have a central data platform group maintaining core infrastructure and governance, and semi-autonomous data teams aligned to business domains (marketing analytics team, merchandising analytics team, etc.) – akin to a data mesh organizational approach where each domain handles their data as a product. At this stage, the modern data stack is fully evolved and likely incorporates cutting-edge practices:

Data Architecture: The architecture likely spans multiple systems and possibly multiple cloud environments. Many enterprises adopt a data mesh or distributed architecture approach: different business units manage their own pipelines and datasets (with their own data engineers/analysts), but under a central governance framework to ensure interoperability. Data is treated as a product. The central team ensures standards and tooling: they provide the data platform as a self-service platform for the domain teams. They also enforce data governance policies across all teams (security, privacy, compliance).

Ingestion & Processing: The enterprise likely has a mix of streaming, batch, and micro-batch processing all coexisting.

Modeling & Semantic Mastery: By the time a company is a mature enterprise, its data modeling layers are highly sophisticated. The enterprise will have:

Master Data Management (MDM): Possibly separate systems to maintain master records. MDM solutions ensure that across all data products, certain key entities (customer, product, store) are consistently identified and attributed.

Semantic Consistency: A fully fleshed-out semantic layer or business glossary that is adopted company-wide. This could be an extension of the BI tool semantics or a standalone semantic layer that multiple BI tools feed from.

Complex Data Models: The transformations may now include complex business logic that has been accumulated over years. It’s crucial that these are well-documented and maintained. The enterprise may use a combination of SQL, Python, and other tools for transformations.

The data team might introduce domain-oriented data modeling – where each business domain has its own schema and models, which then feed into enterprise-wide models. This aligns with the data mesh concept of domains owning their data transformations too.

Team Evolution

The team in a mature enterprise is massive and structured. Building on the hybrid model from scaling, it might now resemble a federated model or data mesh organization:

Each domain (Marketing, Supply Chain, Ecommerce, Stores, etc.) has a data/analytics team embedded that includes data engineers, analysts, perhaps data scientists, and they produce domain-specific data products. They report into their function but also have a dotted line to central data governance.

A Central Data Platform & Governance team provides the underlying platforms and supports domain teams in using them. They also handle enterprise-wide concerns like governance, privacy, and cross-domain data integration.

There are likely leadership roles such as Data Product Owners for each major data product or domain, responsible for maximizing value from that domain’s data. And a Data Architect or Architecture board that ensures all these data products can interoperate and fit into the big picture

Summary

In summary, the modern data stack in any company matures from simple and scrappy to sophisticated and strategic. By approaching this evolution in milestone stages, you can align your data infrastructure with your company’s growth, ensuring that at each step, data serves the business effectively. By following the progression outlined above, a company can navigate the modern data stack journey with confidence and pragmatism, laying the groundwork for sustained, data-driven success.